Project Details

- Focus: Applications, Collection
- Research: Behavior analytics
- Use Case: Assessing personnel skills
- Use Case: Automatic data annotation

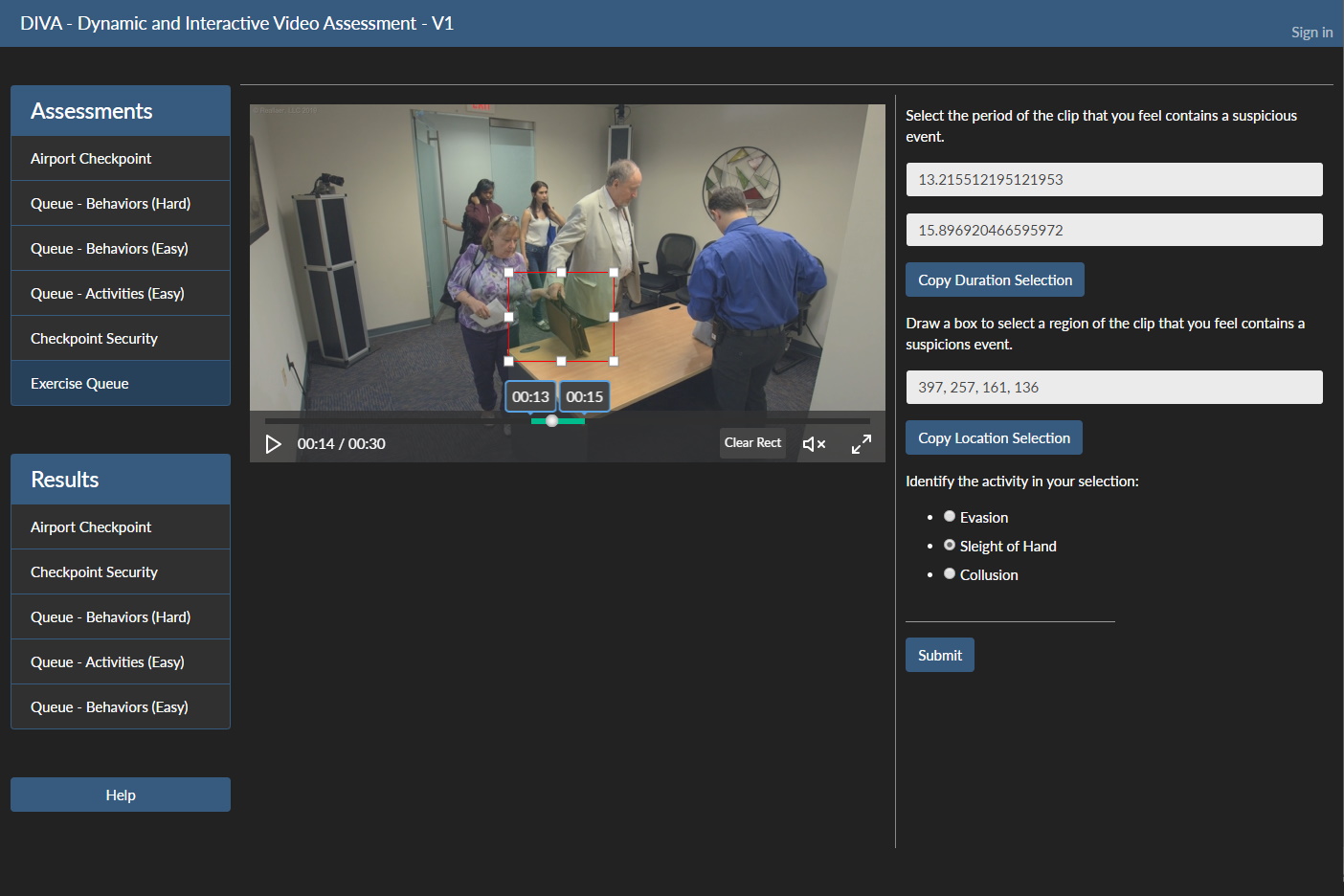

Dynamic and Interactive Video Assessment

There is a need to quickly assess personnel’s ability to locate and identify behavior-related events in video data as part of evaluating career progression. Current evaluation methods are limited when they examine only the live environment and the metrics it produces, such as behavioral detections or false alarms in an operating queue. Because collecting data in the field is difficult, it is hard to sustain ongoing skills assessment, personnel evaluation, and diagnosis of results.

In the past, we created local thick-client solutions for marking up videos with behaviors and other facial cues. Our experience managing this data and these annotation tools led us to research a platform for building assessment processes that adapt to each client’s needs. One of our most frequent requests was for an application that is not tied to a local PC. In response, we created a web application.

Custom questionnaires can be created through our backend interface, videos from our extensive behavior libraries can be associated with each questionnaire, and questionnaires can be combined into an overall assessment. User management modules support an assessment workflow in which evaluated users log in to the web application and submit answers either by interacting with videos through on-screen tools or by giving typical text or multiple-choice responses.
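The questionnaire and assessment composition described above can be pictured as a small data model. This is a minimal sketch assuming a Python backend; the class names (`Question`, `Questionnaire`, `Assessment`), the `kind` values, the `score_markup` helper, and the one-second tolerance are all hypothetical illustrations, not the platform's actual schema. It also shows one possible way to score an on-screen markup answer against reference annotations, counting hits, misses, and false alarms with a greedy nearest-match rule.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical data model for illustration only; not the platform's actual schema.


@dataclass
class Question:
    prompt: str
    kind: str  # assumed values: "markup", "text", or "multiple_choice"
    choices: List[str] = field(default_factory=list)
    # For "markup" questions: expert-annotated event times in seconds (assumed).
    reference_times: List[float] = field(default_factory=list)


@dataclass
class Questionnaire:
    title: str
    video_id: Optional[str]  # video drawn from a behavior library, if any
    questions: List[Question]


@dataclass
class Assessment:
    name: str
    questionnaires: List[Questionnaire]  # questionnaires composed together

    def question_count(self) -> int:
        return sum(len(q.questions) for q in self.questionnaires)


def score_markup(
    marked: List[float], reference: List[float], tolerance: float = 1.0
) -> Tuple[int, int, int]:
    """Greedily match each user mark to the nearest unmatched reference event
    within `tolerance` seconds. Returns (hits, misses, false_alarms)."""
    unmatched = sorted(reference)
    hits = false_alarms = 0
    for t in sorted(marked):
        # Indices of unmatched reference events inside the tolerance window.
        candidates = [i for i, r in enumerate(unmatched) if abs(r - t) <= tolerance]
        if not candidates:
            false_alarms += 1
            continue
        best = min(candidates, key=lambda i: abs(unmatched[i] - t))
        unmatched.pop(best)
        hits += 1
    # Reference events that no mark matched count as misses.
    return hits, len(unmatched), false_alarms


if __name__ == "__main__":
    clip = Questionnaire(
        "Clip A",
        "library/clip-a",
        [Question("Mark each face touch", "markup", reference_times=[3.0, 10.5, 22.0])],
    )
    background = Questionnaire(
        "Background",
        None,
        [
            Question("Years of experience?", "text"),
            Question("Role?", "multiple_choice", choices=["Analyst", "Supervisor"]),
        ],
    )
    review = Assessment("Quarterly review", [clip, background])
    print(review.question_count())  # 3
    print(score_markup([2.6, 11.0, 15.0], clip.questions[0].reference_times))  # (2, 1, 1)
```

In the example, marks at 2.6 s and 11.0 s fall within a second of reference events, the mark at 15.0 s matches nothing (a false alarm), and the event at 22.0 s goes unmarked (a miss). Greedy matching is simple but not always optimal when events are closely spaced; an assignment-based matcher would avoid those pairing errors.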

Annomatix

While manual video markup is useful for evaluating users’ detection skills, there will always be a need to perform markup automatically. In previous research, we worked with many universities to develop classifiers that detect various behaviors using the Facial Action Coding System (FACS). While integrating these classifiers into an overall detection system, we collected additional training data and accelerated the overall training process. The fielded solution had an extremely large footprint, since it was intended for border crossings and various transportation queues, and it was largely constrained to that domain’s hardware.

Reallaer researched a web-application-based solution that uses a consumer-grade webcam to record a user’s face while they interact with a visual stimulus. In our experiments, users watched television advertisements so that we could gauge their reactions. Rather than inspecting each user’s video manually, we trained and deployed a set of classifiers to grade these videos on a range of emotions associated with the upper and lower face. Training these classifiers and running them on video collected through a web application remains one of our first ventures into the cloud and into in-house training of machine learning algorithms.
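One way to turn frame-level classifier outputs into a per-video grade is to aggregate them per label. The sketch below assumes, hypothetically, that the classifiers emit a probability for each frame keyed by `(region, emotion)`, where region is `"upper"` or `"lower"` face. The label names, the 0.5 threshold, and the `grade_video` function are illustrative assumptions, not the classifiers Reallaer trained. For each label, the sketch reports the mean, the peak, and the fraction of frames at or above the threshold.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# Hypothetical per-frame classifier output: probability keyed by
# (face region, emotion label), e.g. ("lower", "happiness").
Label = Tuple[str, str]
FrameScores = Dict[Label, float]


def grade_video(
    frames: List[FrameScores], threshold: float = 0.5
) -> Dict[Label, Dict[str, float]]:
    """Aggregate frame-level probabilities into a per-video summary per label."""
    per_label: Dict[Label, List[float]] = defaultdict(list)
    for frame in frames:
        for label, prob in frame.items():
            per_label[label].append(prob)

    report: Dict[Label, Dict[str, float]] = {}
    for label, probs in per_label.items():
        report[label] = {
            "mean": mean(probs),
            "peak": max(probs),
            # Fraction of this label's frames at or above the threshold.
            "active_fraction": sum(p >= threshold for p in probs) / len(probs),
        }
    return report


if __name__ == "__main__":
    frames = [
        {("upper", "surprise"): 0.25, ("lower", "happiness"): 0.75},
        {("upper", "surprise"): 0.75, ("lower", "happiness"): 1.0},
    ]
    for label, stats in grade_video(frames).items():
        print(label, stats)
```

Summary statistics like these let a reviewer rank or filter recorded reactions instead of watching every video; a time-aware summary, such as smoothing over a sliding window, may be preferable when brief expressions matter.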